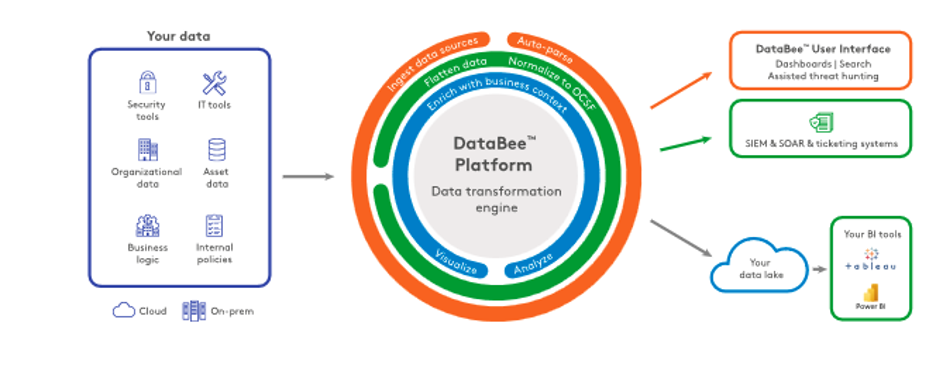

Big data keeps getting bigger. Security teams struggle to leverage it because they may run more than a hundred security tools, often with millions of sensors generating data in disparate, proprietary formats. To make sense of this data, they need to break down silos by bringing the data together and normalizing it so that different internal stakeholders can access it.

To help make sense of data, the data fabric architecture emerged to facilitate the end-to-end integration of various data pipelines and cloud environments through intelligent and automated systems. This may include aggregating and normalizing data, as well as indexing and cataloging it across distributed sources. The result is easier access to the data and deeper insights across it. A data fabric differs from a typical data lake in that data in a data lake may be disconnected, unrelated, and stored in various structures, while a data fabric typically provides a unified, cohesive view of the data.

What is a data fabric?

A data fabric architecture is designed to be a more comprehensive and more centralized approach to data management. Consolidating data into a single data fabric provides additional benefits, such as helping to ensure data quality and integrity, establishing data trust and lineage, and providing a data layer that can form the foundation for building multiple use cases for different data consumers. With a security data fabric, security practitioners can contextualize data and work with other internal stakeholders across compliance, IT, and senior leadership teams.

What is a security data fabric?

A security data fabric is a data fabric architecture that integrates and manages security data from various sources in a unified, secure, and governed approach. It is designed to navigate complex security semantics, streamlining workflows for security, risk, and compliance.

The security data fabric architecture overlays security data and telemetry with business data to create a single, accessible, and time-series enriched dataset by normalizing and analyzing data from various locations, including:

- Asset and CMDB information

- Organizational and business data

- SIEM tools

- Cloud platform information

- Network and on-premises infrastructure

- Application logs

- SQL databases

- Operating system logs

- Endpoint security logs

- Vulnerability data

- Alerts and security findings

- Authentication and user information

- Threat intelligence

- And more

By aggregating, contextualizing, and correlating vast quantities of data, security data fabrics continuously bring in data from on-premises, cloud, or hybrid systems and tools in any data structure and format. When paired with a data lake, the flexible, modular architecture reduces data costs while enabling high-performance analysis for operational and analytical use cases across multiple data consumers.
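To make the normalization and enrichment step concrete, here is a minimal sketch in Python. It assumes two made-up source formats (an EDR record and a firewall record) and a made-up common schema; the field names, the `ASSET_OWNERS` lookup, and the helper functions are all hypothetical and are not taken from any specific product.

```python
from datetime import datetime, timezone

# Hypothetical raw events: same asset, different field names and time formats.
RAW_EDR = {"host": "LAPTOP-7", "user_name": "ALICE",
           "ts": "2024-03-01T12:00:00Z", "event": "process_start"}
RAW_FIREWALL = {"src_host": "laptop-7", "epoch": 1709294460, "action": "deny"}

# Hypothetical business context, e.g. exported from a CMDB.
ASSET_OWNERS = {"laptop-7": "finance"}


def normalize_edr(record):
    """Map the assumed EDR format onto the common schema."""
    ts = datetime.strptime(record["ts"], "%Y-%m-%dT%H:%M:%SZ")
    return {
        "source": "edr",
        "asset": record["host"].lower(),
        "user": record["user_name"].lower(),
        "timestamp": ts.replace(tzinfo=timezone.utc),
        "action": record["event"],
    }


def normalize_firewall(record):
    """Map the assumed firewall format onto the common schema."""
    return {
        "source": "firewall",
        "asset": record["src_host"].lower(),
        "user": None,  # this source carries no user identity
        "timestamp": datetime.fromtimestamp(record["epoch"], tz=timezone.utc),
        "action": record["action"],
    }


def enrich(event, asset_owners):
    """Attach business context (the owning team) to a normalized event."""
    return {**event, "owner": asset_owners.get(event["asset"], "unknown")}


events = [
    enrich(normalize_edr(RAW_EDR), ASSET_OWNERS),
    enrich(normalize_firewall(RAW_FIREWALL), ASSET_OWNERS),
]
events.sort(key=lambda e: e["timestamp"])  # one time-ordered dataset
```

Once both records share the same keys, the same asset naming, and timezone-aware timestamps, they can be sorted, joined, and queried together regardless of which tool produced them.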

Security data fabrics enable organizations to identify and respond to security threats in real time, provide a comprehensive view of their security posture and controls, manage network operations, and more.

What are some use cases solved by a security data fabric?

The security data fabric makes it possible to gain greater value from security data by delivering better security and compliance decisions using connected insights from security and IT data enriched with business context.

Continuous controls monitoring

When GRC professionals have access to a security data fabric, they gain the dynamic insights they need for continuous controls monitoring (CCM). A security data fabric enables them to weave together:

- Security telemetry

- Business logic and policies

- Asset owner details

- Organizational hierarchy information

With continuous access to enriched data insights, compliance professionals can build CCM dashboards at scale with visualization tools, giving them year-round insight into real-time and historical controls and compliance trends.
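As a rough illustration of what a CCM metric behind such a dashboard could look like, the sketch below joins a hypothetical asset inventory (with owner and business unit) against the set of assets seen in endpoint telemetry, then compares coverage to a policy threshold. The data, the `POLICY_THRESHOLD` value, and the `control_coverage` function are illustrative assumptions only.

```python
from collections import defaultdict

# Hypothetical asset inventory with owner and organizational hierarchy.
ASSETS = [
    {"asset": "laptop-7", "owner": "alice", "business_unit": "finance"},
    {"asset": "laptop-9", "owner": "bob", "business_unit": "finance"},
    {"asset": "srv-db-1", "owner": "carol", "business_unit": "engineering"},
]

# Hypothetical security telemetry: assets with a reporting endpoint agent.
EDR_REPORTING = {"laptop-7", "srv-db-1"}

# Hypothetical policy: 95% of assets must run the endpoint agent.
POLICY_THRESHOLD = 0.95


def control_coverage(assets, reporting, threshold):
    """Compute per-business-unit coverage and list the uncovered assets."""
    totals = defaultdict(int)
    covered = defaultdict(int)
    gaps = defaultdict(list)
    for item in assets:
        unit = item["business_unit"]
        totals[unit] += 1
        if item["asset"] in reporting:
            covered[unit] += 1
        else:
            gaps[unit].append((item["asset"], item["owner"]))
    return {
        unit: {
            "coverage": covered[unit] / totals[unit],
            "compliant": covered[unit] / totals[unit] >= threshold,
            "gaps": gaps[unit],
        }
        for unit in totals
    }


report = control_coverage(ASSETS, EDR_REPORTING, POLICY_THRESHOLD)
```

Because each gap carries the asset owner, a failing control can be routed to a named person rather than to an anonymous hostname.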

Security hygiene

Cybersecurity and compliance practitioners using a security data fabric have cleaner and more complete asset and asset owner information within an organization's data estate. Security hygiene using data woven from across IT and security solutions enriched with business context helps answer the ‘who’ and ‘what’ questions about your asset inventory.

Threat hunting

Threat hunters can use a security data fabric to review and correlate traditionally siloed data, enabling them to use Python, SQL, and other languages to craft artificial intelligence (AI) and machine learning (ML) models that learn from their data. A security data fabric with entity resolution capabilities can monitor a user, device, or asset and provide an entity-centric timeline view, sparing security teams from sifting through logs and manually correlating entity information.
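The idea of entity resolution feeding an entity-centric timeline can be sketched as follows. This is a simplified illustration that assumes a static alias table; real entity resolution is usually far more involved. The `ALIASES` map, the event fields, and the function names are hypothetical.

```python
from collections import defaultdict

# Hypothetical alias table: different tools identify the same person differently.
ALIASES = {
    "alice": "user:alice",
    "alice@example.com": "user:alice",
    "CORP\\alice": "user:alice",
}

# Hypothetical normalized events from three sources, in arrival order.
EVENTS = [
    {"time": "2024-03-01T12:05:00Z", "source": "vpn",
     "principal": "alice@example.com", "action": "login"},
    {"time": "2024-03-01T12:00:00Z", "source": "edr",
     "principal": "CORP\\alice", "action": "process_start"},
    {"time": "2024-03-01T12:02:00Z", "source": "idp",
     "principal": "bob", "action": "mfa_failure"},
]


def resolve(principal):
    """Return a canonical entity ID, or a marker for unknown identities."""
    return ALIASES.get(principal, f"unresolved:{principal}")


def build_timelines(events):
    """Group events by resolved entity and order each group by time."""
    timelines = defaultdict(list)
    for event in events:
        timelines[resolve(event["principal"])].append(event)
    for entries in timelines.values():
        # Same-format ISO 8601 UTC strings sort chronologically as text.
        entries.sort(key=lambda e: e["time"])
    return dict(timelines)


timelines = build_timelines(EVENTS)
```

Here `timelines["user:alice"]` holds the EDR and VPN events in chronological order, even though the two tools recorded different identifiers for the same user.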

By parsing, normalizing, and enriching the data collected from disparate sources, threat hunters can create a collaborative yet programmatic workflow by:

- Developing use cases

- Capturing and storing data

- Creating baselines

- Launching queries against data to identify anomalies

Since security data fabrics engage early with data sources and, when integrated with a data lake, remove compute constraints, threat hunters have clean data that enables them to conduct multiple simultaneous, complex hunts across large-scale historical datasets.
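The "create baselines, then query for anomalies" steps in the workflow above might look like the following minimal sketch. It assumes hypothetical daily failed-login counts per user and uses a simple z-score test, which is only one of many possible anomaly detection approaches; the data and the threshold of 3.0 are illustrative.

```python
from statistics import mean, stdev

# Hypothetical history: failed logins per day for each user.
HISTORY = {
    "alice": [3, 4, 5, 4, 4],
    "bob": [10, 12, 11, 9, 13],
}

# Hypothetical counts observed today.
TODAY = {"alice": 25, "bob": 12}


def build_baselines(history):
    """Summarize each user's normal behavior as (mean, sample std dev)."""
    return {user: (mean(counts), stdev(counts))
            for user, counts in history.items()}


def find_anomalies(today, baselines, threshold=3.0):
    """Flag users whose count exceeds the baseline by more than
    `threshold` standard deviations."""
    flagged = []
    for user, count in today.items():
        average, spread = baselines[user]
        if spread > 0 and (count - average) / spread > threshold:
            flagged.append(user)
    return flagged


baselines = build_baselines(HISTORY)
anomalies = find_anomalies(TODAY, baselines)
```

With these sample numbers only `alice` is flagged: her count of 25 is far above a baseline of about 4 per day, while `bob`'s 12 is within his normal range.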

Incident detection and response

Security teams can use a security data fabric to augment or displace their SIEM’s capabilities by incorporating security analytics. The security data fabric enables collaboration between security analysts, who are the subject matter experts, and data scientists, who are the analytics experts. By working together, data scientists can build behavior models and ML projects that security teams use to analyze data and display security metrics for faster incident detection.

Additionally, the security data fabric’s ETL pipelines normalize all data before storing it in a security data lake, where security teams can:

- Access the raw or optimized data

- Retain historical data for full timeline investigations

- Create a single repository for security analytics workloads

By transforming the data early on, security teams reduce both the compute power an investigation requires and the time spent waiting on it.
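A minimal sketch of the transform-then-store pattern is shown below. It uses an in-memory SQLite database purely as a stand-in for a security data lake, and the record format and table layout are hypothetical. Each record is normalized once at load time, the raw payload is kept alongside the optimized columns, and an investigation becomes a plain SQL query over historical data.

```python
import json
import sqlite3

# In-memory SQLite is only a stand-in for a real security data lake.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE events (ts TEXT, source TEXT, asset TEXT, action TEXT, raw TEXT)"
)

# Hypothetical raw records, including one from months earlier.
raw_records = [
    ("edr", {"host": "LAPTOP-7", "ts": "2024-03-01T12:00:00Z", "event": "process_start"}),
    ("edr", {"host": "LAPTOP-7", "ts": "2023-11-15T08:30:00Z", "event": "process_start"}),
    ("edr", {"host": "SRV-DB-1", "ts": "2024-03-01T12:01:00Z", "event": "logon"}),
]

# Transform at load time: normalized columns plus the untouched raw payload.
for source, record in raw_records:
    conn.execute(
        "INSERT INTO events VALUES (?, ?, ?, ?, ?)",
        (record["ts"], source, record["host"].lower(),
         record["event"], json.dumps(record)),
    )
conn.commit()

# Investigation: full timeline for one asset, with no parsing at query time.
rows = conn.execute(
    "SELECT ts, action FROM events WHERE asset = ? ORDER BY ts",
    ("laptop-7",),
).fetchall()
```

Because hostnames were lowercased and timestamps stored in one format during ingestion, the query does no parsing or cleanup of its own, which is where the compute and waiting-time savings come from.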